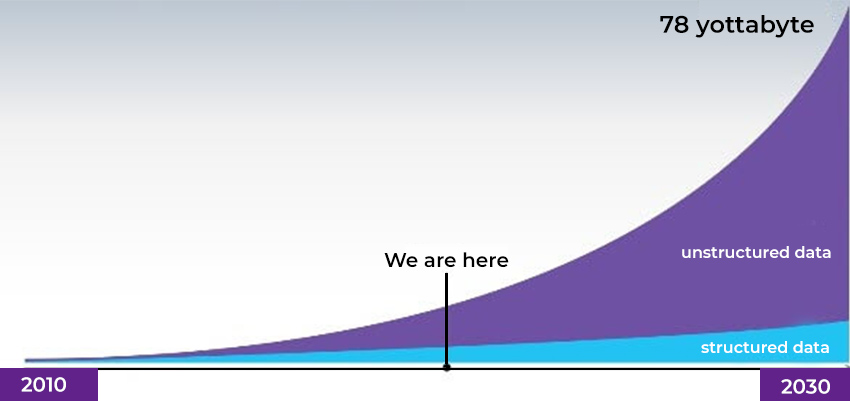

Over 90% of the world’s data has been created in the last two years, and with 2.5 quintillion bytes of data generated daily, it is clear that the future will hold even more data, and with it more data problems to handle in real time.

While it is obvious that companies can benefit from the increase in data, we must be cautious and aware of the challenges of Big Data storage that lie ahead.

Fortunately, there are practical solutions that companies can implement to overcome their data problems and thrive in the data-driven economy.

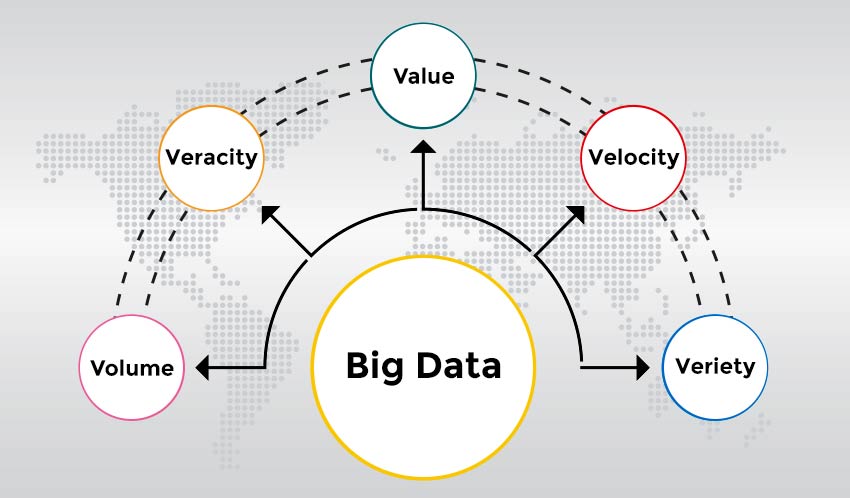

Before exploring the most common Big Data issues, let us understand the term “Big Data.”

What is Big Data, and What are the 9 Challenges for a Data-Driven Company?

Big Data is a massive collection of data that grows exponentially over time. These data sets are so large and complex that traditional data management tools cannot store or process them efficiently.

Bringing Big Data initiatives to fruition necessitates a diverse set of data skills and best practices. Here are nine Big Data challenges that companies must prepare for.

1. Lack of Understanding

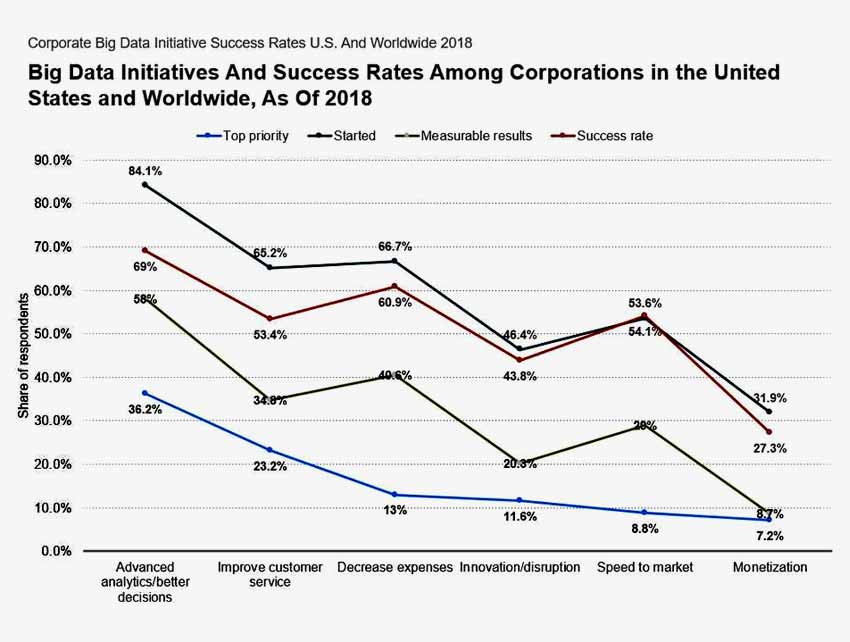

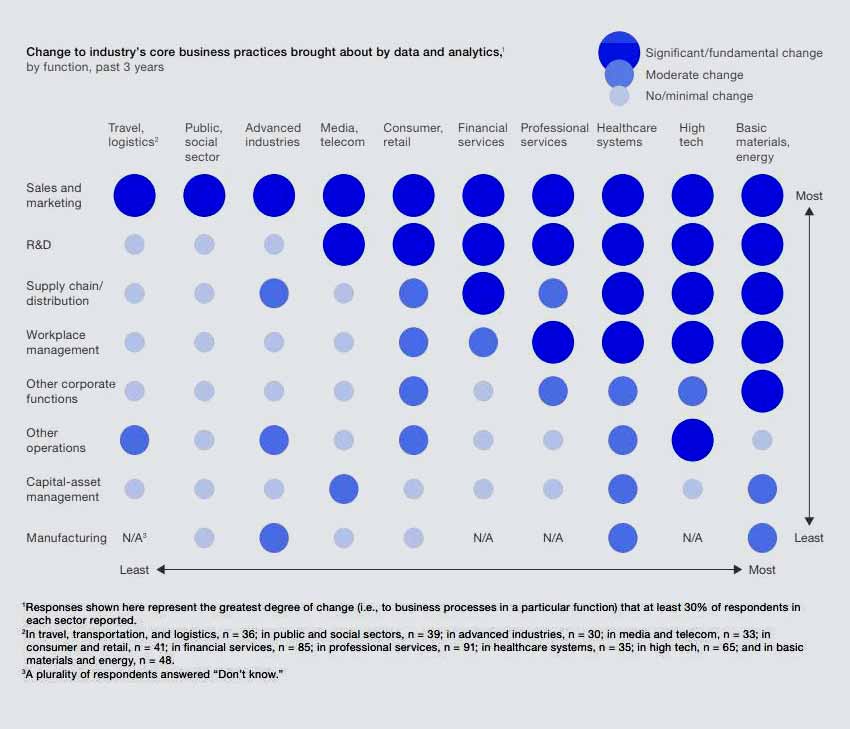

Data can help businesses improve their performance in different ways. Some of the best data use cases are cost reduction, innovation, product launches, increased profitability, and efficiency.

Despite the benefits, businesses have been slow to adopt data technology or develop a strategy for cultivating a data-centric culture.

Solution:

As a significant change for a company, Big Data should be accepted first by top management and then rolled out to the rest of the organization. IT departments must organize a plethora of training and workshops to ensure Big Data project understanding and acceptance at all levels.

To increase the acceptance of Big Data, the implementation and use of the new Big Data solution must be monitored and controlled.

2. Managing Big Data

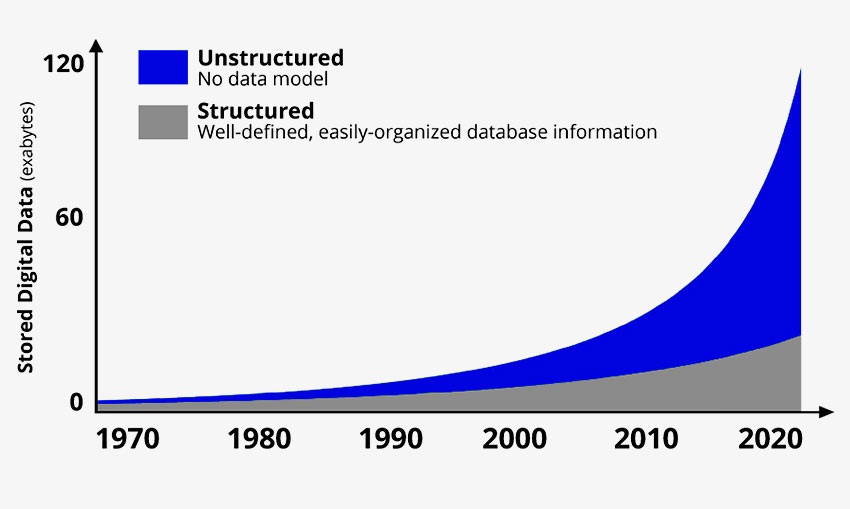

The rate at which Big Data is generated is rapidly outpacing the rate at which computing and storage systems are developed. According to a recent report, the amount of data available by the end of 2020 will be sufficient to fill a stack of tablets measuring 6.6 times the distance between the moon and the earth.

Handling unstructured data is becoming increasingly difficult – from 31% in 2015 to 45% in 2016 and 90% in 2019. According to analysts, the amount of unstructured data generated each year increases by 55% to 65%.

What is Unstructured Data?

Unstructured data cannot be easily stored in traditional column-row databases or spreadsheets like Microsoft Excel tables.

Solution:

Businesses can use unstructured data analytics tools designed specifically to assist Big Data technology users to extract insights from unstructured data. AI is at the heart of these tools.

“Artificial intelligence, Big Data, and machine learning are helping us reduce risk and fraud, upgrade service, improve underwriting and enhance marketing across the firm.”

-Jamie Dimon, Chairman and Chief Executive Officer at JPMorgan Chase

Businesses can generate meaningful information from large volumes of unstructured data generated on a daily basis using AI algorithms.

3. Data Governance and Security

Big Data entails dealing with data from a variety of sources. The majority of these sources employ unique data collection methods and formats. As a result, inconsistencies are common, even in data with similar value variables. Even making adjustments is difficult.

Even though the accuracy of Big Data isn’t a critical issue, that doesn’t give companies the right to ignore the dependability of their data.

What is Big Data Security?

Big Data security is the protection of Big Data from unauthorized access.

Data may not only contain incorrect information, but it may also contain duplication and contradictions. As we already know, low-quality data cannot provide useful insights or assist in identifying precise opportunities for managing your business tasks.

So, how can data quality be improved?

Solution:

The market is brimming with data cleansing techniques. But first and foremost, a company’s Big Data must have a proper model in place, and only after that can you proceed to do things like:

- Making data comparisons based on the single point of truth, such as comparing contact variants to their spellings in the postal system database.

- Matching and merging records from the same entity.
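As a rough illustration of matching and merging, the sketch below groups customer records by a normalized email address and keeps the first non-empty value for each field. The field names (`email`, `name`, `phone`), the sample records, and the "first non-empty value wins" rule are assumptions made for the example; real matching rules are usually more involved.

```python
from collections import defaultdict


def normalize_key(record):
    """Build a matching key from a trimmed, lower-cased email (assumed field)."""
    return record["email"].strip().lower()


def merge_records(records):
    """Group records that share a matching key and merge each group into one.

    For every field, the first non-empty value encountered is kept.
    """
    groups = defaultdict(list)
    for record in records:
        groups[normalize_key(record)].append(record)

    merged = []
    for key, group in groups.items():
        combined = {"email": key}
        for record in group:
            for field, value in record.items():
                if field == "email":
                    continue
                # Fill the field only if we do not already have a value for it.
                if value and not combined.get(field):
                    combined[field] = value
        merged.append(combined)
    return merged


# Hypothetical sample data: the first two rows describe the same customer.
customers = [
    {"email": "Ann@Example.com ", "name": "Ann Lee", "phone": ""},
    {"email": "ann@example.com", "name": "", "phone": "555-0100"},
    {"email": "bob@example.com", "name": "Bob Ray", "phone": "555-0199"},
]

merged_customers = merge_records(customers)
```

Here the two "Ann" rows collapse into a single record that carries both the name and the phone number.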

Another thing that businesses must do is define data preparation and cleaning rules. Automation tools can also be useful, particularly when dealing with data preparation tasks.

Furthermore, identify the data your company does not require, and then integrate data purging automation ahead of your data collection processes so that this data is eliminated before it enters your network.
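One way to picture purging before collection is a small filter that sits in front of ingestion and drops records you have decided you do not need. This is only a sketch: the required fields and the unwanted event types below are hypothetical rules chosen for the example.

```python
# Hypothetical purge rules, chosen only for illustration.
REQUIRED_FIELDS = {"user_id", "event", "timestamp"}
UNWANTED_EVENTS = {"heartbeat", "debug"}


def should_keep(record):
    """Keep a record only if it is complete and not an unwanted event type."""
    has_required = REQUIRED_FIELDS.issubset(record)
    return has_required and record["event"] not in UNWANTED_EVENTS


def purge(stream):
    """Yield only the records worth storing; everything else is dropped."""
    for record in stream:
        if should_keep(record):
            yield record


incoming = [
    {"user_id": 1, "event": "purchase", "timestamp": "2021-01-01T10:00:00"},
    {"user_id": 1, "event": "heartbeat", "timestamp": "2021-01-01T10:00:05"},
    {"user_id": 2, "event": "login"},  # incomplete: no timestamp
]

kept = list(purge(incoming))
```

Only the purchase event survives; the heartbeat and the incomplete login record never reach storage.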

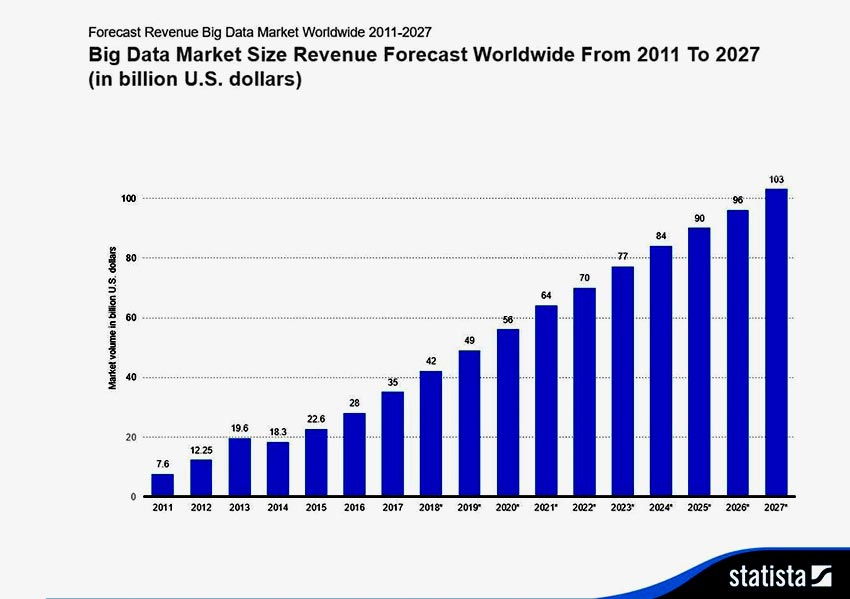

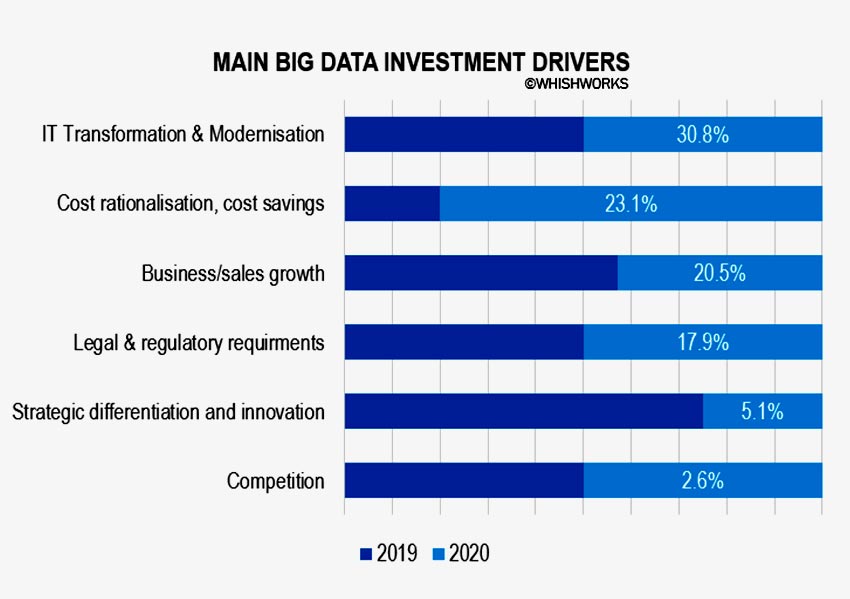

4. Huge Investment

Big Data adoption projects are expensive. If you choose an on-premises solution, you must account for the costs of new hardware, new hires, electricity, and so on.

If you choose a cloud-based Big Data solution, you will still need to hire people and pay for cloud services, Big Data solution development, and the setup and maintenance of necessary frameworks.

In both cases, you must also plan for future expansion so that Big Data growth does not get out of control and cost you a fortune.

“Deep learning craves Big Data because Big Data is necessary to isolate hidden patterns and to find answers without overfitting the data. With deep learning, the more good quality data you have, the better the results.”

– Wayne Thompson, SAS Product Manager

Solution:

The specific salvation of your company’s wallet will be determined by your company’s specific technological requirements and business goals. Companies that want flexibility, for example, benefit from cloud computing, while businesses with extremely stringent security requirements go on-premises.

There are also hybrid solutions, in which parts of the data are stored and processed in the cloud and parts on-premises, which can be cost-effective.

Additionally, using data lakes or optimizing algorithms can save money:

- Data lakes can offer low-cost storage for data that you don’t need to analyze right now.

- Optimized algorithms, in turn, can reduce computing power consumption by 5 to 100 times.

The most common feature of Big Data is its explosive growth. And one of the major challenges with Big Data is precisely this.

5. Upscaling difficulties

The design of your solution can be thought through and adjusted for upscaling with no additional effort. But the real issue isn’t the process of adding new processing and storage capacities.

It is the complexity of scaling up so that your system’s performance does not degrade while remaining within budget.

Solution:

The first and most important safeguard against such Big Data challenges is a well-designed Big Data solution architecture. As long as your Big Data solution can boast such a feature, there will be fewer problems later on. Another critical step is to design your Big Data tools with future scalability in mind.

Aside from that, you must plan for your system’s maintenance and support to ensure that any changes brought about by data growth are appropriately addressed.

6. Too many options

Less is more, according to psychologist Barry Schwartz. Schwartz describes the “paradox of choice” as the way option overload can lead to buyer inaction. Anxiety and stress can instead be reduced by restricting a consumer’s options.

What is Compression Tiering?

There are two related compression technologies: compression tiering and deduplication.

Deduplication is a compression technology that will “dedupe” identical blocks in a volume to save space by only storing one copy on a disk. Compression tiering provides compression ratios at different levels based on the importance of data to an organization.
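To make the deduplication idea concrete, here is a minimal sketch that splits a byte string into fixed-size blocks, stores each distinct block once, and keeps an ordered list of block hashes so the original can be rebuilt. The 4-byte block size and the use of SHA-256 as the block fingerprint are assumptions for the demo; storage products work with much larger blocks and their own formats.

```python
import hashlib

BLOCK_SIZE = 4  # deliberately tiny so the example is easy to follow


def dedupe_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into blocks and store each distinct block only once."""
    store = {}   # block digest -> block bytes (a single copy per block)
    layout = []  # ordered digests needed to rebuild the original data
    for start in range(0, len(data), block_size):
        block = data[start:start + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)
        layout.append(digest)
    return store, layout


def restore(store, layout) -> bytes:
    """Rebuild the original data from the block store and the layout."""
    return b"".join(store[digest] for digest in layout)


volume = b"AAAABBBBAAAAAAAACCCC"  # five blocks, three of them identical
store, layout = dedupe_blocks(volume)
```

The volume contains five blocks, but only three distinct ones are stored, and `restore(store, layout)` returns the original bytes.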

In the world of data science and data tools, the options are almost as numerous as the data itself, so deciding on the solution that is right for your business can be overwhelming, especially when it will likely affect all departments and hopefully be a long-term strategy.

Solution:

A good solution, like understanding data, is to leverage the experience of your in-house expert, perhaps a CTO. If that is not an option, hire a consulting firm to help you make a decision. Use the internet and forums to find useful information and to ask questions.

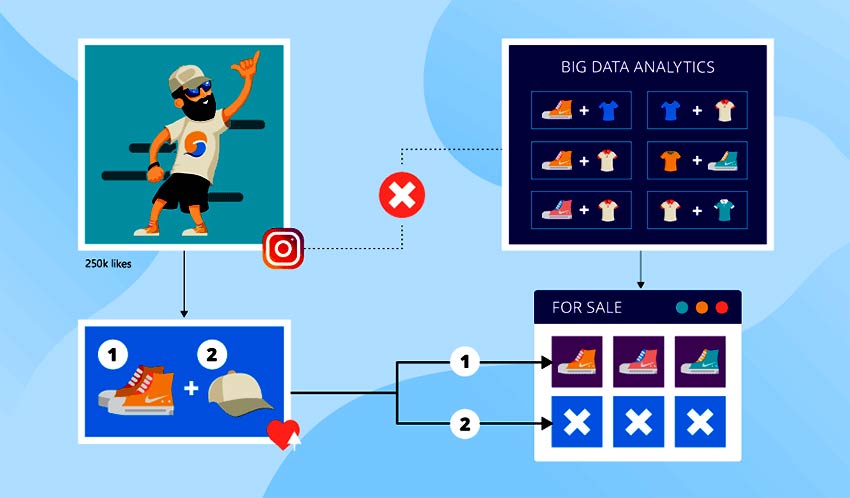

7. Creating Meaning with Data

You might have the information. It’s neat, accurate, and well-organized. However, how do you use it to provide valuable insights to help you improve your business?

Many businesses are turning to powerful data analysis tools, data scientists, data analysts, and data engineers to help them assess the big picture while also breaking down the data into meaningful bits of information that can then be transformed into actionable results.

Solution:

Whether it’s a consistent reporting structure or a dedicated analytics team, make sure your data is translating into measurable results. This entails taking data and transforming it into actions for the business to take in order to produce business wins.

8. Advancement of Technology

Each technological advancement builds more quickly on the previous one, because technologies become more efficient at each step and can better inform what comes next. Consider how quickly Cloud Computing and Artificial Intelligence are evolving.

You don’t want your data tools to become obsolete due to the rapid advancement of technology and systems, especially if you’ve invested time, energy, and human resources in them.

Solution:

You can’t stop progress, but you can prepare for it. This starts with staying up to date on information technology and its new features, products, and threats.

9. Data Integration

Big Data integration is the process of combining data from various business departments into a single version of the truth that is accessible to all members of the organization.

However, the IT team finds it difficult to manage Big Data that comes from a variety of software and hardware platforms and in a variety of formats.

The presence of numerous and distinct data processing platforms makes it difficult for organizations to simplify their IT infrastructure in pursuit of simple data handling and managing Big Data process flows. This is a significant challenge for the IT departments.

Solution:

Don’t try to do it by hand. It may appear simpler and less expensive at first glance, but it will not be cost-effective in the long run.

Select software automation tools that include hundreds of pre-built connectors for APIs, files, and databases. While you may need to hand-code some API integrations on occasion, these tools can handle the majority of the work.
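To show the kind of work such tools automate, the sketch below pulls the same customers from two differently shaped sources, a CSV export and a JSON feed, and folds them into one record per customer. The source contents, the field names, and the choice of `customer_id` as the shared key are all assumptions made for the example.

```python
import csv
import io
import json

# Hypothetical exports from two departments, in different formats.
SALES_CSV = "customer_id,amount\n101,25.50\n102,10.00\n"
SUPPORT_JSON = '[{"customerId": 101, "tickets": 2}, {"customerId": 103, "tickets": 1}]'


def load_sales(text):
    """Read the CSV export and key each row by an integer customer id."""
    rows = csv.DictReader(io.StringIO(text))
    return {int(row["customer_id"]): {"amount": float(row["amount"])} for row in rows}


def load_support(text):
    """Read the JSON feed, mapping its 'customerId' field to the shared key."""
    return {item["customerId"]: {"tickets": item["tickets"]} for item in json.loads(text)}


def integrate(*sources):
    """Combine per-source fields into a single record per customer."""
    unified = {}
    for source in sources:
        for customer_id, fields in source.items():
            record = unified.setdefault(customer_id, {"customer_id": customer_id})
            record.update(fields)
    return unified


unified = integrate(load_sales(SALES_CSV), load_support(SUPPORT_JSON))
```

Customer 101 appears in both sources and ends up with both `amount` and `tickets`, while customers 102 and 103 keep only the fields their single source provided. Each additional format needs its own loader, which is exactly the effort that pre-built tooling saves.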

Conclusion

In today’s data-driven world, data management is critical and should not be overlooked. You must be proactive in understanding and implementing data solutions that support your business objectives. You can effectively mitigate any Big Data problems by doing so.

If your company follows these guidelines religiously, it has a good chance of defeating the Scary Nine. Also, if you need expert advice on a variety of issues, please do not hesitate to contact Brainvire experts. Best wishes on your Big Data journey!
